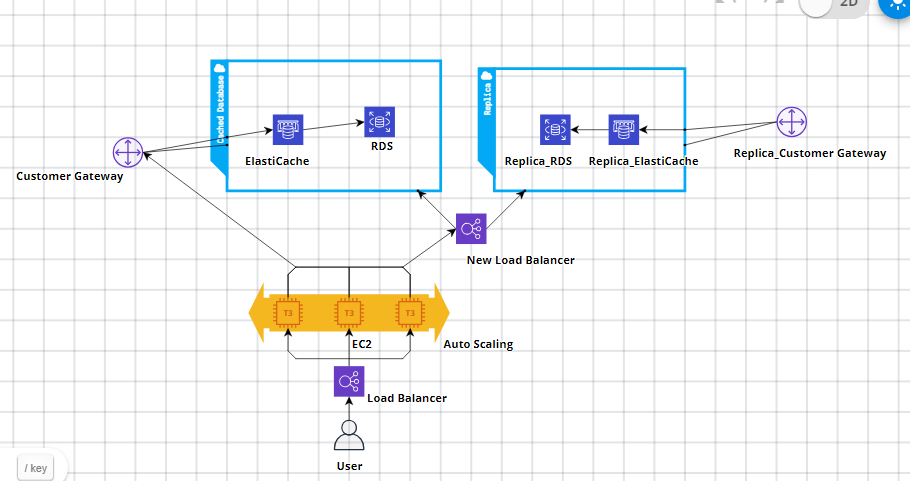

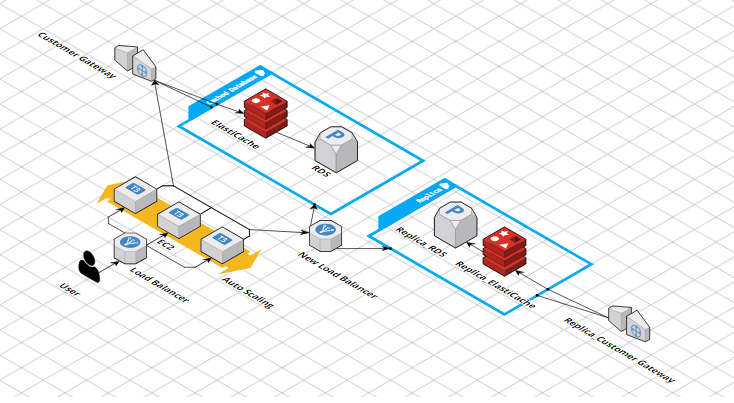

Cloud architecture diagrams (above, made using Cloudcraft, in 2D and 3D)

What is cloud architecting: In the early 2000s, Amazon's e-commerce website was a mess because it had grown without proper planning. Amazon then realized it needed APIs to organize its infrastructure. Cloud architecting starts with identifying the business needs and managing their size and complexity. Technical deliverables should be aligned with business goals. That's where AWS comes in: it helps you create highly reliable cloud architectures.

Features:

-> Highly secure - applies security at all layers.

-> Consistent approach

-> Cost optimization - an iterative process and a moving target. Keep lowering your cost.

-> Reliability

-> Performance efficiency - dynamically acquire computing resources to meet demand, maximizing performance for that demand. Employ mechanical sympathy (choose the technology that best fits how the workload actually behaves).

-> AWS Well-Architected Tool: asks need-based questions about your workload, covers best practices such as operational excellence, and helps you make well-informed decisions based on the latest best practices and architecture guidance.

Design keys:

-> Empirical data comes from load testing.

-> Enable scalability - when demand nears capacity, an alarm triggers EC2 Auto Scaling to launch a new instance before capacity is reached. You can be notified when the resource allocation changes.

-> Eliminate single points of failure. The application needs to keep working even if one hardware component fails. You can use a secondary or standby server.

-> Treat resources as disposable.

-> Use caching.

-> Secure the entire infrastructure.
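The scalability point above can be sketched as a threshold rule. This is only an illustration of the idea, not how EC2 Auto Scaling is implemented; the thresholds and the `ScalingPolicy` and `desired_instances` names are assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class ScalingPolicy:
    # All values below are assumed for illustration, not AWS defaults.
    scale_out_threshold: float = 0.75  # add an instance at 75% utilization
    scale_in_threshold: float = 0.30   # remove an instance at 30% utilization
    min_instances: int = 1
    max_instances: int = 10


def desired_instances(current: int, utilization: float, policy: ScalingPolicy) -> int:
    """Return the instance count a simple threshold policy would ask for."""
    if utilization >= policy.scale_out_threshold:
        target = current + 1  # demand is near capacity: launch before it is reached
    elif utilization <= policy.scale_in_threshold:
        target = current - 1  # capacity is idle: treat the instance as disposable
    else:
        target = current
    # Never go below the minimum or above the maximum fleet size.
    return max(policy.min_instances, min(policy.max_instances, target))
```

For example, with two instances at 80% utilization the policy asks for a third, while a single instance at 10% utilization stays at the minimum of one.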

Treating resources as disposable is a benefit over traditional servers, where each server somehow ends up configured differently. In some cases, containers or component-based solutions are needed instead of Amazon EC2. Other services include Amazon Virtual Private Cloud (VPC), Amazon Elastic Block Store (EBS), and Amazon FSx.

CapEx (capital expenditure): you pay for the resources whether they are active or inactive.

Variable (pay-as-you-go) model: pay only for the services you need and stop paying when you no longer use or need them.
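A small worked comparison of the two models, as a sketch. The cost figures used in the usage note are made up, and `break_even_hours` and `cheaper_model` are hypothetical helper names.

```python
def break_even_hours(fixed_monthly_cost: float, hourly_rate: float) -> float:
    """Hours per month at which pay-as-you-go costs the same as the fixed model."""
    if hourly_rate <= 0:
        raise ValueError("hourly_rate must be positive")
    return fixed_monthly_cost / hourly_rate


def cheaper_model(hours_used: float, fixed_monthly_cost: float, hourly_rate: float) -> str:
    """Name the cheaper model for a given monthly usage."""
    variable_cost = hours_used * hourly_rate
    return "pay-as-you-go" if variable_cost < fixed_monthly_cost else "fixed"
```

With an assumed fixed cost of 100 per month and an hourly rate of 0.5, the break-even point is 200 hours: a workload that runs 50 hours a month is cheaper on pay-as-you-go, and one that runs 300 hours is not.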

AWS Regions:

-> Geographical area

-> AWS backbone

-> Data replication

-> Some Regions must be enabled before you can use them.

-> AWS Local Zones are connected to AWS Regions and offer services such as Virtual Private Cloud, Elastic Block Store, and FSx.

-> Core applications are deployed on N+1 servers to allow for redundancy.

-> Points of presence: edge locations and regional edge caches throughout the globe reduce latency. Regional edge caches absorb content and provide an alternative to going back to the origin by caching content closer to the user.

Module 3:

Creating a static website that is hosted on Amazon S3. The task is to understand the business requirements and build a website that meets them.

Amazon S3 is an object storage service with unlimited total storage. Every bucket has a name that is globally unique, so you cannot use generic names like Hello World (they are already taken, and bucket names cannot contain spaces or uppercase letters). Total storage is unlimited, but an individual object is capped at 5 TB. Each object has a key, a value, and metadata.

The key is used to retrieve the object, and a version ID retrieves a specific version of it. Object values are immutable: to modify an object, you make the change outside S3 and upload it again. The REST API gives each object a globally unique URL.
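To make the key, value, and metadata structure concrete, here is a minimal sketch that models an object and builds its virtual-hosted-style URL. The `S3Object` class and `object_url` function are hypothetical names for this example, and the bucket, key, and Region in the usage note are placeholders.

```python
from dataclasses import dataclass, field
from typing import Dict
from urllib.parse import quote


@dataclass
class S3Object:
    key: str                 # name used to retrieve the object
    value: bytes             # the stored content
    metadata: Dict[str, str] = field(default_factory=dict)


def object_url(bucket: str, key: str, region: str) -> str:
    """Build the virtual-hosted-style URL for an object.

    The key is percent-encoded; '/' is kept so that prefixes stay readable.
    """
    return f"https://{bucket}.s3.{region}.amazonaws.com/{quote(key)}"
```

For a placeholder bucket `example-bucket` in `us-east-1`, the key `images/logo 1.png` maps to `https://example-bucket.s3.us-east-1.amazonaws.com/images/logo%201.png`.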

Durability: 99.999999999% (11 9s)

Availability: 99.99% of the time for S3 Standard (the 11 9s figure applies to durability, not availability)

Scalability - unlimited capacity

Security - fine-grained access control

Performance - supported by many design patterns
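A worked example of what 11 9s of durability means, assuming the figure is read as the yearly probability that a given object survives. Exact fractions are used to avoid floating-point rounding; the function names are made up for this sketch.

```python
from fractions import Fraction

# 99.999999999% durability, read as a per-object survival probability per year.
DURABILITY = Fraction(99_999_999_999, 100_000_000_000)


def expected_annual_losses(object_count: int) -> Fraction:
    """Expected number of objects lost per year for a given object count."""
    return object_count * (1 - DURABILITY)


def years_per_lost_object(object_count: int) -> Fraction:
    """Average number of years between single-object losses."""
    return 1 / expected_annual_losses(object_count)
```

Storing 10,000,000 objects gives an expected loss of 1/10,000 of an object per year, that is, on average one lost object every 10,000 years.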

S3 -> all S3 buckets are private and protected by default.

Always follow the principle of least privilege -> give each user only the access they need.

TOOLS: Block Public Access: a simple setting that overrides any policy or ACL that would make a bucket or object public

IAM policies (AWS Identity and Access Management policies): a good option when the user can authenticate using IAM

Bucket policies: define access to a specific bucket or to specific objects

ACLs (access control lists): predate IAM (less used these days)

S3 Access Points: configure access with names and permissions specific to each application

Presigned URLs: time-limited (temporary) access

AWS Trusted Advisor: checks whether any permissions have accidentally granted global access.
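As an illustration of a bucket policy, the sketch below builds a policy document that lets anyone read objects in one bucket, which is the usual setup for a public static website. The bucket name and the `public_read_policy` helper are placeholders for this example; such a policy only takes effect if Block Public Access allows it, and it deliberately grants nothing beyond `s3:GetObject`, in line with least privilege.

```python
import json


def public_read_policy(bucket: str) -> dict:
    """Return a bucket policy document that allows public reads of objects."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "PublicReadGetObject",
                "Effect": "Allow",
                "Principal": "*",                       # anyone
                "Action": "s3:GetObject",               # read objects only
                "Resource": f"arn:aws:s3:::{bucket}/*"  # every object in the bucket
            }
        ],
    }


if __name__ == "__main__":
    # Print the JSON that would be pasted into the bucket policy editor.
    print(json.dumps(public_read_policy("example-bucket"), indent=2))
```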

Access settings:

Default (private) -> only the OWNER has access

Public access -> ANYONE can access the bucket

Controlled access -> USER A has access but not USER B

DATA ENCRYPTION: encrypted data is unreadable without the key. With server-side encryption, S3 encrypts each object before saving it and decrypts it when you retrieve it.

STATIC WEBSITE (no server-side processing): a low-cost solution for website hosting. Versioning keeps multiple versions of an object in the same bucket.

S3 bucket for website hosting -> HTML files and images -> S3 provides high performance and scalability.

Versioning - provides a way to recover data. A deleted object is marked with a delete marker (it is not actually deleted) -> you can recover it by removing the delete marker, as long as the older versions have not been permanently deleted.
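The delete-marker behavior can be illustrated with a toy in-memory model. This is not the S3 API; `VersionedBucket` and its methods are invented for this sketch, and version IDs are simple list indexes rather than the opaque IDs S3 generates.

```python
from typing import Dict, List, Optional

DELETE_MARKER = object()  # sentinel standing in for an S3 delete marker


class VersionedBucket:
    """Toy model of a versioning-enabled bucket."""

    def __init__(self) -> None:
        self._versions: Dict[str, List[object]] = {}

    def put(self, key: str, value: bytes) -> int:
        """Store a new version and return its (toy) version ID."""
        versions = self._versions.setdefault(key, [])
        versions.append(value)
        return len(versions) - 1

    def delete(self, key: str) -> None:
        """A delete only adds a marker; older versions stay in place."""
        self._versions.setdefault(key, []).append(DELETE_MARKER)

    def get(self, key: str, version_id: Optional[int] = None) -> Optional[bytes]:
        """Return the latest version, or a specific one if version_id is given."""
        versions = self._versions.get(key, [])
        if not versions:
            return None
        value = versions[-1] if version_id is None else versions[version_id]
        return None if value is DELETE_MARKER else value

    def undelete(self, key: str) -> None:
        """Recover the object by removing the delete marker."""
        versions = self._versions.get(key, [])
        if versions and versions[-1] is DELETE_MARKER:
            versions.pop()
```

After two `put` calls and a `delete`, a plain `get` returns nothing, but `get(key, version_id=0)` still returns the first version, and `undelete` makes the second version current again.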

CORS (cross-origin resource sharing) -> selectively allows cross-origin access to resources in your bucket. Sometimes client web applications loaded from one domain need to access resources in a different domain. A CORS configuration is an XML document with rules that state which origins may access your bucket and which operations they may use.
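A minimal sketch of such a CORS document, generated with the Python standard library. The origin is a placeholder, the `cors_configuration` helper is hypothetical, and allowing every request header (`*`) is an assumption made to keep the example short.

```python
import xml.etree.ElementTree as ET
from typing import List


def cors_configuration(origin: str, methods: List[str]) -> str:
    """Build a one-rule CORS configuration as an XML string."""
    root = ET.Element("CORSConfiguration")
    rule = ET.SubElement(root, "CORSRule")
    ET.SubElement(rule, "AllowedOrigin").text = origin
    for method in methods:
        ET.SubElement(rule, "AllowedMethod").text = method
    ET.SubElement(rule, "AllowedHeader").text = "*"  # assumed: allow all headers
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    # Allow one website to PUT, POST, and DELETE objects in the bucket.
    print(cors_configuration("https://www.example.com", ["PUT", "POST", "DELETE"]))
```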

S3 as a data store for analytics (example: financial transaction analysis): S3 scales horizontally, which enables multiple concurrent transactions.

EC2 (Elastic Compute Cloud): a fleet of instances starts when the bid price for Spot Instances is low, or an Amazon EMR cluster is started instead. More data can also be loaded from other data sources. Next, the data is run through different applications, which use different buckets. After the data is processed, the compute capacity is terminated to optimize cost. Lastly, an analytics tool like Amazon QuickSight can help you visualize the processed data. Amazon S3 provides

In the example PUT, POST, and DELETE

Amazon EC2 Servers:

S3: objects uploaded to a Region are automatically stored as redundant copies across multiple Availability Zones in that Region (copies go to other Regions only if you configure replication). This gives high durability and availability. In an ideal world, all redundant copies would update at the same time, but in practice there is a lag. Under S3's original eventual-consistency model, this inconsistency was transient: while some copies were updated and others were still updating, a read issued right after an overwrite or a delete could return the old copy, and the inconsistency resolved quickly. Since December 2020, S3 provides strong read-after-write consistency: a read issued after a successful write or delete returns the latest state.

5 TB is the maximum size of a single object. S3 supports PUTs and DELETEs of whole objects. S3 is often used as a data store and sometimes as an archive service for critical data.

Amazon S3 versioning in the console: AWS Management Console -> Find Services -> S3 -> create a bucket with a universally unique name -> select the bucket -> Properties -> Versioning -> Enable. If you then delete a file (under Actions), it disappears from the default listing, but it still shows up under "Show versions" with a delete marker.

S3 storage classes: S3 Standard works well for websites, applications, and big data analytics. It is the option for frequently accessed data.

S3 Standard-IA (Infrequent Access): lower-cost storage with a minimum 30-day storage charge. Suited to older log files and other non-critical data.

S3 Glacier

Three options for retrieving data:

Expedited - 1 to 5 minutes

Standard - 3 to 5 hours

Bulk - 5 to 12 hours

S3 Glacier Deep Archive: three copies are kept in three different geographical locations (Availability Zones).

S3 Intelligent-Tiering: S3 monitors the access patterns of your objects. If an object in the infrequent access tier is accessed, it is automatically moved back to the frequent access tier. Objects that are not accessed are moved to a lower-cost tier.

Moving data into and out of S3: in the AWS Management Console, files can be uploaded by drag and drop.

Multipart upload: minimum part size 5 MB; allows you to pause and resume; objects up to 5 TB
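A sketch of how an upload could be split into parts. It uses the 5 MB minimum part size from the notes (taken here as 5 MiB) and additionally assumes the S3 limit of 10,000 parts per upload, which is not stated in the notes; `plan_parts` is a hypothetical helper, not part of any AWS SDK.

```python
from typing import Tuple

MIB = 1024 ** 2
MIN_PART_SIZE = 5 * MIB   # minimum part size (the last part may be smaller)
MAX_PARTS = 10_000        # assumed S3 limit on parts per multipart upload


def _ceil_div(a: int, b: int) -> int:
    """Integer ceiling division, avoiding floating-point rounding."""
    return -(-a // b)


def plan_parts(object_size: int, part_size: int = MIN_PART_SIZE) -> Tuple[int, int]:
    """Return (part_size, part_count) for uploading object_size bytes."""
    if object_size <= 0:
        raise ValueError("object_size must be positive")
    part_size = max(part_size, MIN_PART_SIZE)
    # Grow the part size if the object would otherwise need too many parts.
    part_size = max(part_size, _ceil_div(object_size, MAX_PARTS))
    return part_size, _ceil_div(object_size, part_size)
```

A 100 MiB file is split into 20 parts of 5 MiB, while a 5 TiB object needs larger parts so that it still fits into 10,000 parts.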

CLI command: cp = copy

aws s3 cp test.txt s3://AWSDOC-Example-Bucket/test.txt (which file to upload and where to upload it)

benefits: improved throughput

Amazon S3 Transfer Acceleration -> 50 to 500% improvement -> uses edge locations -> data is routed over an optimized network path based on your location

It becomes useful when you have unused bandwidth available.

AWS Snowball -> petabyte-scale data transport -> a physical device that carries the data

AWS Snowmobile -> exabyte-scale -> a container pulled by a semi-trailer truck, holding up to 100 PB -> because moving that much data would otherwise take about 3 years even at 10 Gbps over the internet.

Dedicated security personnel and other security features.
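The transfer-time claim can be checked with simple arithmetic. This sketch assumes a fully used link with no protocol overhead, decimal units (1 PB = 10^15 bytes), and 365-day years; `transfer_years` is a made-up helper name.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600
PETABYTE = 10 ** 15  # bytes, decimal units
GBPS = 10 ** 9       # bits per second


def transfer_years(size_bytes: float, link_bits_per_second: float) -> float:
    """Years needed to push size_bytes through a fully used link."""
    seconds = size_bytes * 8 / link_bits_per_second
    return seconds / SECONDS_PER_YEAR


if __name__ == "__main__":
    print(f"100 PB at 10 Gbps: {transfer_years(100 * PETABYTE, 10 * GBPS):.1f} years")
    print(f"1 EB at 10 Gbps:   {transfer_years(1000 * PETABYTE, 10 * GBPS):.1f} years")
```

Under these assumptions, 100 PB takes roughly two and a half years at 10 Gbps, and a full exabyte takes about ten times as long, which is why a truck is the faster option.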

Architecture for a dynamic application that runs on EC2: the cafe wants to add online ordering for customers. Their current architecture is a simple static website on S3, which won't support placing and viewing online orders.

Available compute options: virtual machine, serverless, containers, and

Virtual machines: EC2 - secure and resizable compute capacity with complete control

Amazon Lightsail: for applications or websites; cost-effective monthly plans; simpler workloads

Containers: Amazon Elastic Container Service (ECS); a container is a software package with everything needed to run an application

Web application and services like Java, Ruby,

Serverless: AWS Lambda and AWS Fargate: run code or Docker containers without creating or managing servers; you pay only for what you use.

AWS Outposts:

AWS Batch: runs batch jobs at any scale

PaaS: enables quick deployment

EC2: resizable compute capacity over which you have complete control, and you pay only for the capacity that you use. It's like an elastic waistband that stretches when demand increases.

Most users run applications whose demand varies, and that is where elasticity becomes handy. The CPU and RAM are in the cloud. Instances run as virtual machines, which run either Amazon Linux or Microsoft Windows. You can run an application on one virtual machine or across multiple virtual machines. The hypervisor is a platform layer that gives the virtual machines access to the actual hardware, such as RAM and storage. The host also provides ephemeral storage. For the boot disk and

Some EC2 instances use instance store volumes, where the data is deleted when the instance is stopped. With Amazon Elastic Block Store (EBS), the data is still there after the instance is stopped and restarted.

AMI (Amazon Machine Image): provides the information needed to launch an instance, including a template for the root volume (operating system, libraries, and utilities). EC2 launches the instance by copying the root volume template to the instance and then booting it.

An AMI can be made available to the public. If you need multiple instances with the same configuration, you can launch them all from the same AMI. You can also use different AMIs for different roles: for example, one AMI for web server instances and another for application server instances. Benefits: repeatability and reusability. Instances launched from the same AMI are exact replicas of each other. AMIs also facilitate recoverability: an AMI provides a backup of a complete EC2 configuration.

Decisions when choosing an AMI to launch an instance:

1) Region: where you want to launch the instance.

2) If needed, copy an AMI from one Region to another.

3) Operating system and root device: Windows vs Linux; Amazon EBS-backed (the root volume persists independently of the instance's life) or instance store-backed (the root volume lasts only for the life of the instance).

4) Architecture that best fits your workload: 32-bit or 64-bit, on either the x86 or the Arm (Advanced RISC Machine) instruction set.

5) Virtualization type: paravirtual (PV) or hardware virtual machine (HVM). They differ in how they boot and in whether they can take advantage of special hardware extensions for better performance.

Choosing an AMI:

1) Quick Start - Linux or Windows AMIs

2) AWS Marketplace AMIs: AMIs from software vendors. You can also create and use your own AMIs.

3) Community AMIs

Instance store-backed vs EBS-backed AMIs: instance store-backed instances take longer to boot and have a smaller maximum root volume (10 GB) than EBS-backed instances (16 TB).

From the EC2 console you can reboot an instance; a rebooted instance keeps the same IP address. From the running state you can also terminate the instance. You can't recover a terminated instance. A stopped instance doesn't incur the same cost as a running instance. You can also hibernate an instance. When you restart a stopped or hibernated instance, it usually starts on a new host computer.

An AMI is needed to launch an EC2 instance. Creating an AMI from an EC2 instance: start from a source AMI, such as a Quick Start AMI, or from an imported virtual machine, and launch an EC2 instance. Configure the instance with the specific settings and tools you need. An EBS-backed instance is captured as a new AMI and registered automatically; an instance store-backed instance is captured with the EC2 AMI tools and then registered manually. Once registered, it is a new starter AMI. Lastly, you can copy the AMI to other Regions.

EC2 Image Builder: provides a simple graphical interface -> generates images with only the essential components -> provides version control -> lets you test an image before it is distributed. Pipeline: source image -> build components (like Python 3, etc.) -> hardening and tests (security checks, and compatibility checks by running a sample application) -> schedule (when the pipeline should run) -> automated distribution (to selected Regions).

EC2 instance type (CPU, memory, storage, and network performance characteristics) -> depends on workloads and cost limits. In m5d.xlarge: m is the family name, 5 is the generation, d means an additional capability such as SSD storage (instead of HDD), and xlarge (extra large) is the size.
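The naming scheme can be sketched as a small parser. The regular expression covers only simple names of the form family, generation, optional attribute letters, dot, size (such as `m5d.xlarge`); it is an assumption for this example and does not handle every instance type name AWS uses.

```python
import re
from typing import NamedTuple


class InstanceType(NamedTuple):
    family: str      # e.g. "m" for general purpose
    generation: int  # e.g. 5
    attributes: str  # e.g. "d" for local SSD storage; may be empty
    size: str        # e.g. "xlarge"


_PATTERN = re.compile(r"([a-z]+)(\d+)([a-z]*)\.([a-z0-9]+)")


def parse_instance_type(name: str) -> InstanceType:
    """Split a simple instance type name into its parts."""
    match = _PATTERN.fullmatch(name)
    if match is None:
        raise ValueError(f"unrecognized instance type name: {name!r}")
    family, generation, attributes, size = match.groups()
    return InstanceType(family, int(generation), attributes, size)
```

For example, `parse_instance_type("m5d.xlarge")` yields family `m`, generation `5`, attributes `d`, and size `xlarge`.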

Instance types:

General purpose: balanced compute and memory; gaming servers, analytics applications, development environments.

Compute optimized: high-performance processors; batch processing, ML, multiplayer gaming, video encoding; C5 and C5n instances

Memory optimized: in-memory caches, high-performance databases, big data analytics.

Accelerated computing: HPC, graphics, machine learning and artificial intelligence

Storage optimized: NoSQL, big data, etc.

There are about 270 instance types. Choose the latest types, which have better price-to-performance ratios. You can change an instance's type as recommended: the Instance Types page on the console, in combination with AWS Compute Optimizer, generates ML-based recommendations and classifies instances as under-provisioned, optimized, or over-provisioned.

User data: use a user data script to initialize an EC2 instance at launch. User data is a set of shell commands or cloud-init directives.

It runs when the instance first starts, before the instance is accessible on the network. A typical script updates all the packages. The instance logs the output messages of the user data script. On Windows instances, user data can be written as Windows PowerShell scripts. If the instance is rebooted, the user data script does not run a second time.
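A sketch of preparing a user data script. It assumes an Amazon Linux instance that uses `yum`, the package name in the usage note is only an example, and both helper functions are hypothetical. It also assumes the 16 KB limit on raw user data; base64 encoding is what the EC2 API expects, although the console and most SDKs do that step for you.

```python
import base64
from typing import List

MAX_USER_DATA_BYTES = 16 * 1024  # assumed limit on raw (unencoded) user data


def build_user_data(packages: List[str]) -> str:
    """Build a shell script that updates packages and installs extras."""
    lines = ["#!/bin/bash", "yum update -y"]  # assumes a yum-based distribution
    lines += [f"yum install -y {package}" for package in packages]
    return "\n".join(lines) + "\n"


def encode_user_data(script: str) -> str:
    """Check the size limit and return the base64-encoded script."""
    raw = script.encode("utf-8")
    if len(raw) > MAX_USER_DATA_BYTES:
        raise ValueError("user data exceeds 16 KB")
    return base64.b64encode(raw).decode("ascii")
```

Calling `build_user_data(["httpd"])` produces a three-line script that updates all packages and installs a web server on first boot.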

Instance metadata includes the public IP, private IP, hostname, Region, Availability Zone, and the user data specified at launch time. How much preconfiguration should you do in the AMI? A fully baked AMI includes everything needed to serve your workload (the OS, runtime software, and the application itself). An instance launched from it requires no additional boot-time configuration, because all dependencies are preinstalled. This reduces bootstrap time but increases the time needed to build the AMI.

The alternative is a JeOS (just enough operating system) AMI: an OS-only AMI with just enough to start the instance. Logging, monitoring, and security tools are installed after launch. Instances are slow to become ready because many dependencies have to be installed at boot.

Most organizations prefer a hybrid AMI (OS plus a few dependencies).

Adding storage to Amazon EC2: instance store (can only be used by the instance itself; ephemeral; good for buffers, caches, and scratch data), EBS (attached to one instance at a time, but it can be detached and attached to another instance), Amazon Elastic File System (EFS, for Linux instances, to share a data volume among multiple instances), and Amazon FSx for Windows File Server (to share a data volume among multiple Windows instances).

SSD-backed volumes:

gp2: most general workloads

io1: high performance, extremely low latency

HDD-backed volumes:

st1 (Throughput Optimized HDD): frequently accessed, throughput-intensive workloads such as big data

sc1 (Cold HDD): large datasets that are accessed less frequently

EBS: a volume attaches to only one instance at a time

S3: it's an object store, not a block store. A block store allows changes to individual blocks in a file, whereas a changed S3 object must be uploaded again in full.

EBS-optimized instances: gain additional EBS performance; Nitro-based instance types offer high performance and security

EFS: files are accessed through a Linux-based file system interface from EC2 instances - full file system access - can be used with any Linux AMI - can be mounted by more than one EC2 instance - use cases: home directories, database backups, big data analytics, media workflows, web serving, enterprise file systems

FSx: Windows file system storage for EC2 instances - NTFS (New Technology File System), backed by high-performance SSD, supports DFS (Distributed File System), works with Windows servers and Microsoft Active Directory - use cases: web serving, software development environments, big data analytics

Payment options:

1) On-Demand Instances: billed at the On-Demand rate

2) Reserved Instances:

3) Savings Plans: 1-year or 3-year term - cheaper than On-Demand in exchange for a usage commitment

4) Spot Instances: spare Amazon EC2 capacity at substantial savings off On-Demand prices. Usage is billed per second with a 60-second minimum for common operating systems such as Amazon Linux, Windows, and Ubuntu (the same applies to On-Demand and Reserved Instances); some other operating systems are billed by the full hour.

5) Dedicated Hosts: for specific regulatory compliance requirements. Per-host billing; visibility of sockets, cores, and host ID; affinity between a host and an instance; targeted instance placement; add capacity by using an allocation request. Benefit: lets you use server-bound software licenses.
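The difference between the two billing granularities can be sketched as follows, assuming per-second billing with a 60-second minimum on one side and rounding up to the full hour on the other. The hourly rate in the usage note is made up, and the function names are hypothetical.

```python
MINIMUM_BILLED_SECONDS = 60
SECONDS_PER_HOUR = 3600


def per_second_billable(runtime_seconds: int) -> int:
    """Seconds billed under per-second billing with a 60-second minimum."""
    return max(MINIMUM_BILLED_SECONDS, runtime_seconds)


def hourly_billable(runtime_seconds: int) -> int:
    """Seconds billed when usage is rounded up to the next full hour."""
    hours = -(-runtime_seconds // SECONDS_PER_HOUR)  # integer ceiling division
    return max(1, hours) * SECONDS_PER_HOUR


def cost(billable_seconds: int, hourly_rate: float) -> float:
    """Convert billed seconds into a charge at the given hourly rate."""
    return billable_seconds * hourly_rate / SECONDS_PER_HOUR
```

An instance that runs for 90 seconds is billed for 90 seconds under per-second billing but for a full 3,600 seconds under hourly billing; at an assumed rate of 0.36 per hour, that is about 0.009 versus 0.36.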

Correlated failure is when many hardware components fail at the same time. One strategy against it is a partition placement group: the instances in one partition do not share underlying hardware with the instances in other partitions. Cluster placement group: instances sit in a single Availability Zone, which gives a higher throughput limit and suits tightly coupled node-to-node communication. In a partition placement group, the partitions are on different racks. Apache
